The Spotlight Effect: How AI Learns to Focus

What if computers could decide what's important just like we do? AI's attention mechanism shines a computational spotlight on what matters, much as your brain tunes out the feeling of your shoes until someone mentions them. Is this artificial spotlight the key to context?

Today's Focus

This "attention" concept keeps swirling around in my mind. It popped up everywhere in last week's research about artificial neurons, connecting to those "otherwise zero" parts of equations. What exactly does "attention" mean in a system made of math? How do tensors and vectors somehow develop the ability to focus? (And isn't it fascinating how we anthropomorphize mathematical operations with such human terms?)

When we pay attention, our consciousness shifts - we amplify certain signals while dampening others. Your brain automatically filters the important stuff - these words - from background noise, physical sensations, even your own breathing [9]. But AI systems don't have consciousness! They're just operations unfolding across silicon. So how does a matrix multiplication learn to care about some values more than others?

I keep picturing attention as this spotlight moving across a landscape of numbers [8], illuminating some while leaving others in shadow. Who - or what - moves that spotlight? And why does it shine where it does? Let's pull this thread and see where it leads us.

Learning Journey

Attention is how AI models decide which parts of their input actually matter [6]. The mechanism arose to fix a fundamental limitation of early encoder-decoder (sequence-to-sequence) networks: everything the model read, whether a tweet or a novel, got compressed into a single fixed-length vector. A SINGLE vector! This is the infamous "fixed-length bottleneck" problem [6]: trying to squeeze War and Peace through the same tiny straw you'd use for a haiku.

Information got lost. Tons of it. Early words just... faded away... by the time the model reached the end of longer texts. Imagine reading the final chapter of a book with only a fuzzy recollection of the first chapter - no ability to flip back.

Humans don't memorize entire books perfectly. We flip back to earlier chapters. We maintain connections between ideas even when they're pages apart. We create mental links across distance and time.

That's essentially what attention mechanisms do. They create pathways between different parts of input data. Models can look back at the ENTIRE input at each step of processing [5][6]. Direct connections form between related elements regardless of distance.
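For the curious, here is the core of the original formulation by Bahdanau et al. [5], written as equations. When producing target word i, the decoder first scores every encoder state h_j against its own previous hidden state s_{i-1} using a small learned network a. A softmax then turns those scores into weights. Finally, the weights combine all source positions into a context vector c_i:

$$
e_{ij} = a(s_{i-1}, h_j), \qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k=1}^{T_x} \exp(e_{ik})}, \qquad
c_i = \sum_{j=1}^{T_x} \alpha_{ij} \, h_j
$$

Here T_x is the length of the source sentence. Because the sum runs over every position j, each output step has a direct path to every input word, however far away it sits.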

AI could suddenly connect "it" with the noun it referred to from paragraphs earlier. Maintain context for accurate translation of complex sentences [4]. Remember what happened at the beginning of a text!

How does this magic work? The 2017 paper "Attention Is All You Need" [4] described attention in terms of queries, keys, and values (the "QKV" framework). It is an intuitive system that mirrors how we search for information.

Think of searching in a library:

- A QUERY: "I need books about neural networks"

- KEYS: index cards, catalog systems, shelf markers

- VALUES: the actual books with the information

In processing text, each word (or token) is turned into three vectors by multiplying its embedding with three learned weight matrices:

- A query vector: what information do I need right now?

- A key vector: what information do I contain that might be useful?

- A value vector: here's my actual content

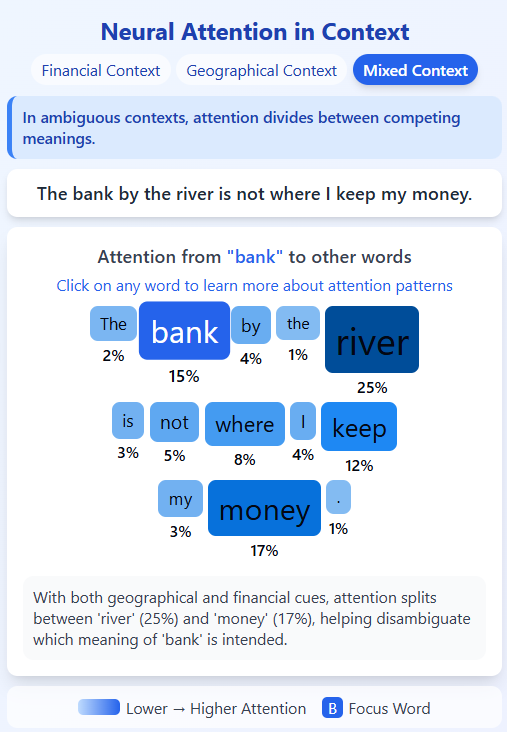
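To make the three roles concrete, here is a minimal sketch in plain Python. It assumes tiny made-up numbers: each token's embedding is multiplied by three projection matrices to produce that token's query, key, and value vectors. The matrices are called W_Q, W_K, and W_V here purely for illustration. In a trained model they are learned, and nothing below comes from a real model.

```python
# Hypothetical toy setup: made-up 4-d embeddings for three tokens.
# Real models learn embeddings and projection weights during training.
embeddings = {
    "the":   [0.1, 0.0, 0.2, 0.1],
    "bank":  [0.9, 0.4, 0.1, 0.3],
    "river": [0.2, 0.8, 0.7, 0.1],
}

# Hypothetical projection matrices (2 x 4 each): they map a 4-d embedding
# to a 2-d query, key, or value vector.
W_Q = [[0.5, 0.1, 0.0, 0.2],
       [0.0, 0.3, 0.6, 0.1]]
W_K = [[0.4, 0.2, 0.1, 0.0],
       [0.1, 0.5, 0.5, 0.2]]
W_V = [[1.0, 0.0, 0.0, 0.0],
       [0.0, 1.0, 0.0, 0.0]]


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def project(token):
    """Return the (query, key, value) vectors for one token."""
    x = embeddings[token]
    return matvec(W_Q, x), matvec(W_K, x), matvec(W_V, x)


for token in embeddings:
    q, k, v = project(token)
    print(f"{token:>5}  query={[round(a, 2) for a in q]}  "
          f"key={[round(a, 2) for a in k]}  value={[round(a, 2) for a in v]}")
```

The same embedding feeds all three matrices. Only the (learned) weights make the query, key, and value play different roles.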

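The word "bank" in "The bank is by the river" needs disambiguation. Is it financial or riverbank? The model scores each word's relevance to "bank" by taking the dot product of the query vector for "bank" with that word's key vector. The dot product measures how "aligned" the two vectors are. The Transformer also divides each score by the square root of the key dimension, meaning the number of entries in each key vector [4]. "River" becomes highly relevant to understanding "bank" while "the" barely registers.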
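Here is a minimal sketch of that scoring step, assuming made-up 2-d vectors. The query for "bank" is compared against each word's key. Following [4], each dot product is divided by the square root of the vector dimension, which keeps scores from growing too large as the dimension increases.

```python
import math


def dot(a, b):
    """Dot product: larger when two vectors point in similar directions."""
    return sum(x * y for x, y in zip(a, b))


def relevance_scores(query, keys):
    """Score one query against every key, scaled by sqrt(d_k) as in Vaswani et al. (2017)."""
    scale = math.sqrt(len(query))
    return [dot(query, k) / scale for k in keys]


tokens = ["the", "bank", "is", "by", "the", "river"]
# Hypothetical 2-d vectors, hand-picked so that "river" lines up with the "bank" query.
bank_query = [1.0, 2.0]
keys = [[0.1, 0.0], [0.4, 0.3], [0.0, 0.1], [0.1, 0.1], [0.1, 0.0], [0.6, 0.9]]

scores = relevance_scores(bank_query, keys)
for token, score in zip(tokens, scores):
    print(f"{token:>5}: {score:.2f}")
```

These raw scores are not yet weights. They can be any real number and don't sum to anything in particular.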

These scores then pass through a "softmax" function. Softmax converts them into positive weights that add up to 1, like percentages summing to 100% [11]. "River" might get 65% of the weight while "the" gets 5% [16]. The model then forms its updated representation of "bank" as a weighted sum of every word's value vector. Each word's information therefore contributes in proportion to its weight.
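A minimal sketch of the softmax and weighted-sum steps follows. The raw scores and value vectors are made up for illustration. Vaswani et al. [4] write the whole computation compactly as softmax(QK^T / sqrt(d_k)) V. This sketch handles a single query row of that formula.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1.
    Subtracting the max first avoids overflow in exp() and does not change the result."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, values):
    """Blend the value vectors, each scaled by its attention weight."""
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


tokens = ["the", "bank", "is", "by", "the", "river"]
# Hypothetical raw relevance scores for the query "bank" (made-up numbers).
scores = [0.1, 0.7, 0.1, 0.2, 0.1, 1.7]
# Hypothetical 2-d value vectors, one per token.
values = [[0.0, 0.1], [0.5, 0.5], [0.0, 0.1], [0.1, 0.0], [0.0, 0.1], [0.1, 0.9]]

weights = softmax(scores)
for token, w in zip(tokens, weights):
    print(f"{token:>5}: {w:.0%}")
print("blended 'bank' vector:", [round(x, 3) for x in weighted_sum(weights, values)])
```

Because softmax exponentiates, a modest lead in raw score (1.7 vs 0.7 here) becomes a much larger lead in weight.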

We can actually SEE this happening! Visualization tools show these attention patterns as heat maps or connection lines [7][13]. A window into machine cognition.
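Tools like BertViz [2][13] draw these patterns interactively. As a rough text-only stand-in, the sketch below prints one query word's attention weights as a bar chart. The helper name attention_bars is hypothetical. The weights for "bank" are invented to echo the illustrative 65%/5% split from the river example and were not produced by a real model.

```python
def attention_bars(query, tokens, weights, width=40):
    """Text bar chart of one query token's attention weights over all tokens."""
    label_width = max(len(t) for t in tokens)
    lines = [f"attention from {query!r}:"]
    for token, w in zip(tokens, weights):
        bar = "#" * round(w * width)  # bar length proportional to the weight
        lines.append(f"{token:>{label_width}} | {bar} {w:.0%}")
    return "\n".join(lines)


tokens = ["The", "bank", "is", "by", "the", "river"]
# Hypothetical weights for the query "bank" (made up; they sum to 1).
bank_row = [0.05, 0.15, 0.05, 0.05, 0.05, 0.65]
print(attention_bars("bank", tokens, bank_row))
```

With a real model, the weights would come from the model itself, one matrix per layer and head. For example, Hugging Face Transformers models can return them when called with `output_attentions=True`.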

"The animal didn't cross the street because it was too tired." When processing "it," visualizations show attention focusing strongly on "animal" [1]. The model figuring out what "it" refers to - something early AI systems completely failed at.

Function words (like "the," "a," "and") typically receive less attention than content words (nouns, verbs, adjectives) [1]. Just like humans! We don't fixate on "the" - we focus on meaning-carrying words.

Different attention "heads" specialize in different linguistic relationships [1]. Some track syntax, others semantics, others connect pronouns with their antecedents. A team of specialists examining text from multiple angles simultaneously.

Attention mechanisms unleashed capabilities we'd never seen before. Luong et al.'s local attention gained 5.0 BLEU points over non-attentional systems [3]. The Transformer then beat the best previous English-to-German results, ensembles included, by over 2 BLEU points [4]. Massive in machine translation terms.

Models could handle longer sentences without degrading. Bahdanau et al.'s attention model held its performance on sentences of 50 or more words, while the fixed-vector approach dropped off sharply [6]. The Transformer set a new English-to-French state of the art after training for 3.5 days on eight GPUs, "a small fraction of the training costs of the best models from the literature" [4]. Architectures simplified while achieving better results, ditching recurrence and convolutions entirely [4].

Multi-head attention runs several attention mechanisms ("heads") in parallel: 8 in the original base Transformer and 16 in its larger variant [4]. Some heads focus on nearby words while others track long-distance relationships. Together they build a much richer understanding than any single attention mechanism could [17].
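Here is a minimal sketch of the multi-head idea in plain Python, assuming random toy weights. Each head gets its own projection matrices and runs scaled dot-product attention independently. The heads' outputs are then concatenated for each token. The sketch leaves out the final output projection that [4] applies after concatenation. Random weights also won't show any of the specialization described above, which only emerges through training.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Positive weights summing to 1 (max subtracted for numerical stability)."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all keys/values."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        outputs.append([sum(w * v[i] for w, v in zip(weights, values))
                        for i in range(len(values[0]))])
    return outputs


def multi_head_attention(inputs, heads):
    """Run each head with its own (W_Q, W_K, W_V), then concatenate per token.
    Omits the final output projection used in Vaswani et al. (2017)."""
    per_head = []
    for w_q, w_k, w_v in heads:
        Q = [matvec(w_q, x) for x in inputs]
        K = [matvec(w_k, x) for x in inputs]
        V = [matvec(w_v, x) for x in inputs]
        per_head.append(attention(Q, K, V))
    # Token t's output is its head-1 output followed by its head-2 output, etc.
    return [[x for head_out in per_head for x in head_out[t]]
            for t in range(len(inputs))]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_head, n_heads = 4, 2, 2  # toy sizes; the base Transformer used 512, 64, 8
inputs = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(3)]  # 3 random "tokens"
heads = [tuple(random_matrix(d_head, d_model, rng) for _ in range(3))
         for _ in range(n_heads)]

out = multi_head_attention(inputs, heads)
print(len(out), "tokens x", len(out[0]), "features")
```

Splitting the model dimension across heads keeps the total cost similar to one large head, while letting each head learn a different notion of "relevant".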

Attention is arguably THE pivotal innovation behind the current language AI revolution. It sits at the core of virtually every major language model today, including the one processing these very words.

My Take

What blows my mind most about attention mechanisms is how they bridge the gap between cold math operations and something resembling human-like understanding. AI attention isn't the same as human attention (it lacks the biological and conscious aspects), but the parallels are uncanny [62][69].

Both human and machine attention do similar things: they filter out irrelevant information, focus computational resources on what matters, and enable understanding of relationships across distance and time. The difference is that human attention emerged through evolution over millions of years, while machine attention was engineered with mathematics in roughly the past decade!

Attention visualization feels like a window into AI "thinking." When we see attention patterns highlight the connection between "it" and "animal" in a sentence, we're witnessing something that feels almost cognitive: a machine identifying relationships between concepts. This makes attention not just a powerful technical tool but also a promising instrument for explainability in AI, though researchers still debate how faithfully attention weights explain a model's decisions.

Where does this go next? Will we see new variations that better mimic human cognitive processes? Will attention be applied beyond language and vision? How might brain science inform the next generation of attention architectures?

Understanding attention has completely changed how I think about AI. These aren't just statistical pattern matchers anymore; they're dynamic systems that can focus, prioritize, and make connections. Those capabilities feel much closer to genuine intelligence.

Resource of the Day

How does AI know when "bank" means financial institution versus riverbank? It's all about attention - those invisible neural spotlights that connect words into webs of meaning!

Our Neural Attention Visualizer reveals these hidden patterns. Watch "money" capture 52% of attention in financial contexts while "river" claims 58% in geographical ones. Click any word to discover counterintuitive insights about how neural networks handle ambiguity and resolve competing meanings.

Peek inside the cognitive machinery of AI understanding. You'll never look at language models the same way again!

References

1. Alammar, J. (2018). "The Illustrated Transformer". Visual explanation of transformer architecture and attention mechanisms.

2. Vig, J. (2019). "BertViz: A tool for visualizing attention in Transformer-based language models". GitHub repository for attention visualization tool.

3. Luong, M. T., Pham, H., & Manning, C. D. (2015). "Effective Approaches to Attention-based Neural Machine Translation". Research on local attention mechanisms.

4. Vaswani, A., et al. (2017). "Attention Is All You Need". The original transformer paper introducing the architecture.

5. Bahdanau, D., Cho, K., & Bengio, Y. (2014). "Neural Machine Translation by Jointly Learning to Align and Translate". Early paper introducing attention mechanisms.

6. Bahdanau, D., Cho, K., & Bengio, Y. (2014). "Neural Machine Translation by Jointly Learning to Align and Translate". The paper that introduced attention mechanisms to neural machine translation.

7. Comet. (2025). "Explainable AI: Visualizing Attention in Transformers". Guide to transformer attention visualization.

8. Chiu, A. (2023). "Deep Dive into Self-Attention by Hand". Explaining attention with the spotlight analogy.

9. IBM. (2025). "What is an attention mechanism?". Overview of attention in machine learning.

10. Nabil, M. (2023). "Unpacking the Query, Key, and Value of Transformers: An Analogy to Database Operations". Explaining QKV with database analogies.

11. 3Blue1Brown. (n.d.). "Visualizing Attention, a Transformer's Heart". Visualizing attention mechanisms.

12. Yeh, C., et al. (n.d.). "AttentionViz: A Global View of Transformer Attention". Tool for visualizing attention patterns.

13. Vig, J. (n.d.). "BertViz: Visualize Attention in NLP Models". GitHub repository for attention visualization.

14. Lindsay, G. W. (2020). "Attention in Psychology, Neuroscience, and Machine Learning". Comparing attention across disciplines.

15. Chen, H., et al. (2020). "What Can Computational Models Learn From Human Selective Attention?". Comparison of human and computational attention.

16. Affor Analytics. (2024). "Transformers in finance: an attention-grabbing development". Example of attention in word disambiguation.

17. Data Science Dojo. (2024). "An Introduction to Transformers and Attention Mechanisms". Multi-headed attention explanation with bank example.