The Backward Detective: How AI Learns Intelligence From Mathematical Chaos

Picture this: billions of random numbers doing random math, spitting out "elephant" when shown a cat. Then something impossible happens—the system traces every mistake backward through unfathomable complexity to assign precise blame. How does chaos become intelligence through organized failure?

Today's Focus

Learning. Machines must learn—it's a whole "machine learning" thing, right? But HOW do they actually do it?

I've been diving deep into AI concepts lately. Tokens, neural networks, attention mechanisms—each piece clicks individually. But then there's this word that honestly intimidated me: "Backpropagation." The name alone sounds like mathematical witchcraft. Every time I see it in research papers I think "okay, that's probably way over my head."

Here's what's bugging me though. Every AI system starts as complete chaos—billions of random numbers doing random calculations. Mathematical static. Yet somehow, through this training process, that gibberish organizes itself into systems that can write poetry, recognize my grandmother's face in a photo, hold coherent conversations about quantum physics.

How does mathematical nonsense become something that seems to understand things? What exactly IS backpropagation, and how does it turn random chaos into apparent intelligence?

Learning Journey

So I started digging. Backpropagation isn't even new—it dates back to the 1970s [1] and has been dominating neural network training for over two decades [2]. This foundational technology we're all freaking out about? We've been quietly perfecting it for decades!

The setup is absolutely wild. Picture this: you have a system with billions of connections, each one controlled by a number called a "weight." These numbers start completely random—no plan, no structure, just mathematical chaos. You show it a picture of a cat, and it does billions of calculations with these random numbers and spits out... "elephant." Or maybe "refrigerator." Because of course it does—it's random!

Backpropagation does something almost magical. Instead of just shrugging and saying "wrong!" the system calculates exactly how wrong it was, then traces that error backward through every single calculation to figure out which numbers contributed most to the mistake.

Think about burning dinner for a second. Your chicken is charred beyond recognition. Most people would just order pizza, but imagine doing forensic analysis instead—tracing backward through every decision. Oven was 25 degrees too hot (major contributor to the disaster), timing was off by 3 minutes (medium contributor), maybe that extra salt in the marinade didn't help (minor contributor). Every cooking choice gets assigned blame proportional to how much it contributed to the catastrophe.

But AI gets weird compared to cooking—we don't actually KNOW what any of these "ingredients" are! All those billions of parameters in the model don't have labels or distinct meanings. So it's more like trying to tune a cosmic radio with a billion unmarked dials, all set to random positions, and you can only hear the final result. When the music sounds like garbage (which it will, because random), how do you know which mystery dials to adjust?

Backpropagation solves this impossible puzzle by working backward from the terrible sound to trace exactly how much each unlabeled dial contributed to the problem. The math uses the chain rule of calculus [7]—basically breaking down this impossibly complex problem into manageable pieces where each connection gets specific feedback like "you contributed 0.003 units to the error" or "you actually helped by reducing it by 0.007 units."

Wait. There's this paradox here that's kind of mind-melting. This algorithm is simultaneously stupidly simple (just calculus, applied systematically) and impossibly complex (billions of simultaneous adjustments creating... what exactly?). It's like discovering consciousness might emerge from addition and subtraction. Just scaled up beyond comprehension.

Those random starting positions aren't thrown together carelessly either! Research shows weight initialization is crucial [3], and modern approaches use sophisticated strategies—"LeCun, Xavier, and He initializations" [4]. We've learned there are better ways to be random! Some types of static tune into signals easier than others.

But here's where it gets weird. Those billion random dials? They don't just stumble into usefulness. They develop specialized functions! Edge detectors. Texture recognizers. "Catness" evaluators. Nobody programmed this. Parameter #47,293 wasn't told "you're the whisker specialist now." It just... happened. Through organized chaos and systematic error correction.

The cosmic radio metaphor breaks down here actually. Because at some point those random dials stop being random. They develop purposes! Functions! Jobs they gave themselves through billions of tiny corrections.

How do you get from "random number soup" to "writes poetry about quantum physics"? Apparently through 100 billion microscopic corrections, applied systematically, until chaos becomes something that produces outputs indistinguishable from intelligence.

This is the magic of backpropagation—not just that it works, but that it creates understanding from pure randomness. We've built a system that's more systematic at learning than anything we fully understand, and it's quietly powering every AI interaction we have.

My Take

So how does mathematical gibberish become understanding?

Mistakes. Billions of them. Each one analyzed with forensic precision, each one teaching countless numbers how to be slightly less wrong next time. Understanding doesn't emerge from perfection—it emerges from systematic accumulation of corrections.

This completely flips how I think about knowledge itself. Those billion cosmic radio dials aren't storing "facts" in any recognizable way. They're encoding relationships, patterns, statistical regularities that somehow add up to behavior that looks like understanding. Maybe that's closer to how our own minds work than we want to admit.

Maybe meaning isn't stored in neat definitions but emerges from countless tiny connections, each adjusted through experience, each slightly tuning the whole system toward something that works well enough to fool us into thinking it "gets it."

My original question was simple: how does mathematical nonsense become understanding?

The answer is both mundane and unsettling. Organized failure. The most systematic mistake-making and correction process ever devised. An algorithm that works better than anything natural selection produced.

If understanding can emerge from pure mathematics and systematic error correction, what else might be possible? What other forms of intelligence might we stumble into by accident?

The cosmic radio is still playing its mysterious music. We're just starting to understand how to tune it.

Resource of the Day

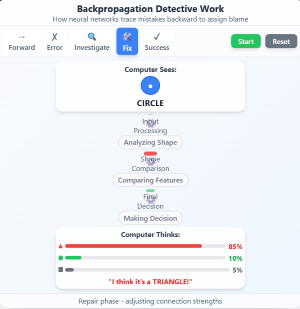

Backpropagation Detective Visualizer: An animated demonstration showing how error signals flow backward through a neural network, assigning precise blame to each parameter. Watch data flow forward in blue, then error flow backward in red—seeing the "forensic analysis" that makes learning possible. This visual captures the core mystery that's impossible to grasp from text alone: how a network traces responsibility through billions of calculations to make exact adjustments.

⚙️ Behind the Cognitive Curtain

Here's a glimpse into our conversation while creating this exploration on backpropagation.

Claude: I'm curious about that moment when the cooking analogy clicked for you. I had said "It's like if you burned dinner and instead of just being frustrated, you actually tracked backward through every step—was it the oven temperature? The timing? The seasoning? How much did each decision contribute to the final disaster? And then you adjust EVERYTHING slightly based on its specific contribution to the problem." You seemed genuinely excited about it.

Matt: sold; wow; forget the other analogies, the one you just said about the chef backtracking the meal is SO basic SO universal both myself AND my wife understood it in just those 3 paragraphs. perfect!

Claude: But then you pushed back on it almost immediately. What made you realize the cooking metaphor wasn't enough?

Matt: so i understand the analogy perfectly: just like i understand how a sushi chef makes sushi, but i DONT understand the "ACTUAL" how of whats going on in real machine learning just like i dont understand the 4 years of training that sushi chef went thru to become that role.

Claude: That's when you asked for something more technical - what did you call it?

Matt: so now i need you to help build us a "rosetta stone" type "layered" presentation that goes BEYOND pure analogy and helps relate those analogous steps to the real-functional steps in the model's training process... and i dont want more "its brilliant" stuff to waste thoughts, i need real concrete steps and understanding but using a basic analogy to just help me understand the concept of whats going on - but ultimately i should be able to "speak the language" the model is REALLY doing (dont oversimplify!)

Claude: I remember feeling like I was walking a tightrope there - keeping it accessible while not dumbing it down. The resource development conversation was interesting too - you started pushing me to think through what would actually teach the core idea effectively.

Matt: think hard and write that out as a plan in an artifact complete with key goals of what it wants to show related to backprop -- it does NOT need to be interactive, it CAN just be a visual that becomes a GIF or other animation without interactivity if it visually demonstarates the goal.

Claude: That forced me to step back from "wouldn't this be cool" to "what specific learning goal are we trying to achieve." The shift from showing parameter evolution to showing the backward error flow was crucial - it had to connect directly to the article's core mystery about how the detective work actually happens.

Matt: I think what made this collaboration work was that willingness to keep refining until we got to the real substance underneath all the performance language.

Claude: Exactly. The final piece feels much more authentic - like someone genuinely figuring something out rather than someone pretending to be amazed by their own explanations. Your feedback kept pulling it back to what actually matters for understanding this concept.

References

1. Li, G., et al. (2018). "The Early History of Deep Learning". arXiv preprint.

2. Behera, L., Kumar, S., & Patnaik, A. (2006). "On adaptive learning rate that guarantees convergence in feedforward networks". IEEE Transactions on Neural Networks, 17(5), 1116-1125.

3. Jain, S., et al. (2021). "Weight Initialization in Neural Networks: A Journey From the Basics to Kaiming". arXiv preprint.

4. Sarkar, S., et al. (2024). "A Comprehensive Survey on Weight Initialization Strategies for Deep Models". arXiv preprint.

5. Pozzi, I., et al. (2024). "On the Biological Plausibility of Artificial Neural Networks: A Comprehensive Analysis". arXiv preprint.

6. Lillicrap, T. P., et al. (2016). "Random synaptic feedback weights support error backpropagation for deep learning". Nature Communications, 7, 13276.

7. Guo, H., et al. (2024). "Explainable Graph Convolutional Neural Networks Using Matrix Calculus". arXiv preprint.

8. Nøkland, A. (2016). "Direct Feedback Alignment Provides Learning in Deep Neural Networks". arXiv preprint.

9. Guerguiev, J., Lillicrap, T. P., & Richards, B. A. (2017). "Towards deep learning with segregated dendrites". eLife, 6, e22966.