NOT Neurons? The Multiply-Add Miracle

Can words really emerge from math? Inside every AI system, trillions of microscopic mathematicians perform the same trivial calculation over and over. Yet somehow, intelligence emerges. Why?

Today's Focus

You know what's weird? I've spent weeks diving into how AI language models work, learning about tokens and vectors and tensors (oh my!), but something has been missing. The vectors get processed through tensor operations, but... how? The first answer I got was that some kind of "artificial neuron" does the hard work.

Wait a minute—I thought these models worked with tokens that become vectors that get processed through tensor operations? Where do these "neurons" fit into that picture? Are they just a helpful metaphor, or are they actual components in the system? And why are they called "neurons" if they're just math?

I've always had this strange relationship with technical concepts—English and language come naturally to me, but math? Not so much. So when I found out the magic-math process inside the tensors is done by something often referred to as "artificial neurons," I pictured some kind of digital version of brain cells (which feels very incorrect).

This is one of those times where I feel like I'm missing a fundamental connection, and it's been bugging me. So today, I'm scratching that mental itch: what exactly are these artificial neurons, and how do they relate to all the other AI concepts we've been exploring?

Learning Journey

I started by trying to place these mysterious "artificial neurons" somewhere in the AI architecture I'd been learning about. Where exactly do they fit between all those tokens, vectors, and tensors?

Turns out, they are the mathematical workers performing those tensor operations we've been discussing! Far from being a separate system, they are the fundamental building blocks behind all that complex tensor math [1]. In practice, a whole layer of neurons is computed at once as a single matrix multiplication, so the "neuron" view and the "tensor" view describe the same arithmetic. I'd been studying the building's blueprint without understanding the individual bricks.
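To make the neuron/tensor connection concrete, here is a minimal sketch in Python with NumPy. The weights, biases, and inputs are made-up numbers, and using `relu` as the activation is an assumption for illustration. The point is only that computing each neuron separately and computing the whole layer as one matrix operation give the same result.

```python
import numpy as np

def relu(z):
    # Keep positive values, replace negatives with zero (works on scalars and arrays).
    return np.maximum(z, 0.0)

# Made-up layer: 3 inputs feeding 2 neurons.
# Row i of W holds neuron i's weights; b[i] is neuron i's bias.
W = np.array([[0.5, 0.1, 0.3],
              [-0.2, 0.4, 0.1]])
b = np.array([0.0, -1.0])
x = np.array([2.0, 5.0, 3.0])

# Neuron view: each neuron multiplies, adds, and activates on its own.
neuron_by_neuron = np.array([relu(np.dot(W[i], x) + b[i]) for i in range(len(b))])

# Tensor view: the whole layer as a single matrix-vector product.
whole_layer = relu(W @ x + b)

print(neuron_by_neuron)
print(np.allclose(neuron_by_neuron, whole_layer))  # True
```

Real frameworks use the second form because one big matrix operation runs far faster on modern hardware than thousands of separate little ones.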

Picture a transformer model as an apartment building: tensors are the floors with their rooms, while artificial neurons act as elevators and stairs connecting everything. These components move information between layers and transform it along the way. (Curious how our minds gravitate toward spatial metaphors for abstract math concepts—as if we can only grasp the numerical by translating it into physical space.)

So what exactly is an artificial neuron? At its core, it's a mathematical function loosely inspired by brain cells, though "inspired" stretches the comparison considerably [2]. Each one follows a surprisingly simple process: multiply the inputs by weights, add up the results (usually along with a constant called a bias), then apply an activation function to determine the output.

That's it! Multiply, add, activate.

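I visualize each neuron as a gate with a threshold, like a dam that releases water only once the pressure builds high enough: below that level, nothing happens; above it, the gates open. The artificial neuron "fires" only when its combined input signal is strong enough. (Strictly, many modern activation functions open the gate gradually: above the threshold, the output keeps growing with the input instead of flipping to a fixed "on" value.)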
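A few lines of Python show the difference between a hard on/off threshold and a graded one. The step function stands in for the classic "fires or doesn't" picture, while ReLU and the sigmoid are two common activation functions used in real networks. The input values are arbitrary.

```python
import math

def step(z):
    # Classic all-or-nothing threshold: off below zero, fully on at or above it.
    return 1.0 if z >= 0 else 0.0

def relu(z):
    # Off below zero; above zero the output grows with the input.
    return max(0.0, z)

def sigmoid(z):
    # Smooth S-curve: never exactly off or on, squeezed into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

for z in (-2.0, -0.5, 0.5, 2.4):
    print(f"z={z:5.1f}  step={step(z):.1f}  relu={relu(z):.1f}  sigmoid={sigmoid(z):.3f}")
```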

Here's a concrete example: An artificial neuron receives three inputs (2, 5, and 3) with assigned weights (0.5, 0.1, and 0.3). It multiplies each pair (2 × 0.5 = 1, 5 × 0.1 = 0.5, 3 × 0.3 = 0.9), sums the products (1 + 0.5 + 0.9 = 2.4), then applies an activation function. Using ReLU—which simply preserves positive values and zeroes out negatives—our output remains 2.4 [3].
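The same calculation fits in a tiny Python function. This is a sketch, not how real frameworks do it (they batch many neurons into one tensor operation). The inputs and weights are the ones from the example above. Because the example doesn't use a bias, the bias here defaults to zero, which is an assumption.

```python
def relu(z):
    # Keep positive values, zero out negatives.
    return max(0.0, z)

def neuron(inputs, weights, bias=0.0):
    # Multiply each input by its weight and add everything up...
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ...then activate.
    return relu(total)

output = neuron([2, 5, 3], [0.5, 0.1, 0.3])
print(round(output, 6))  # 2.4
```

Rounding only hides tiny floating-point noise. The arithmetic is exactly the 1 + 0.5 + 0.9 from the text.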

Wait—that's the entire computation? Just arithmetic and a simple rule? How could this possibly process human language?

The answer lies in scale and connectivity. While individual neurons do almost nothing interesting, a network with just one hidden layer can, in theory, approximate any continuous function over a bounded range of inputs as closely as you like, given enough neurons [4]. Modern language models connect enormous numbers of these elementary units through billions of weights in carefully designed arrangements, allowing intricacy to emerge from simplicity.
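Here is a hand-built illustration of that idea. It is not a proof of the theorem. Cybenko's result is about sigmoid units, but this sketch swaps in ReLU because the construction is easier to read. Each hidden neuron "switches on" at a different point, and the output neuron adds up their contributions so the network traces the curve x² between 0 and 1. The weights are chosen by hand rather than learned. Adding more neurons shrinks the worst-case error.

```python
def relu(z):
    return max(0.0, z)

def build_hidden_layer(n):
    """Hand-picked weights for n ReLU neurons whose sum traces x**2 on [0, 1]."""
    knots = [k / n for k in range(n)]             # where each neuron switches on
    slopes = [(2 * k + 1) / n for k in range(n)]  # slope of x**2's chord on each segment
    # Each neuron contributes the change in slope at its own knot.
    coeffs = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n)]
    return knots, coeffs

def network(x, knots, coeffs):
    # Hidden neuron k computes relu(x - knot_k); the output neuron takes a weighted sum.
    return sum(c * relu(x - t) for t, c in zip(knots, coeffs))

def max_error(n, samples=1001):
    knots, coeffs = build_hidden_layer(n)
    xs = [i / (samples - 1) for i in range(samples)]
    return max(abs(network(x, knots, coeffs) - x * x) for x in xs)

for n in (2, 4, 8, 16):
    print(n, "neurons -> max error", round(max_error(n), 5))
```

Each doubling of the neuron count cuts the worst error by roughly a factor of four. It is a toy version of "enough simple pieces can bend into almost any shape."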

This mirrors brain function in some ways—individual biological neurons neither understand language nor recognize faces, yet their collective network somehow generates intelligence. The key differences lie in organization and biological complexity.

In transformer models (which we'll explore more deeply in future posts), these artificial neurons organize into sophisticated patterns that allow information to flow between different parts of the network. They form layers where outputs from one become inputs to the next, creating transformation cascades that convert raw inputs into increasingly meaningful representations. There's an almost alchemical quality to how these simple units, when arranged in the right architecture, can selectively focus on relevant information—but that's a rabbit hole for another day.
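Here is a minimal sketch of that cascade in Python with NumPy, assuming a plain stack of fully connected layers with ReLU activations. It is not a transformer (there is no attention here). The layer sizes are arbitrary and the weights are random rather than trained, so the output means nothing. The sketch only shows each layer's output becoming the next layer's input.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def make_layer(n_in, n_out):
    # Hypothetical untrained layer: random weights, zero biases.
    # Row i of the weight matrix belongs to neuron i of this layer.
    return rng.normal(scale=0.5, size=(n_out, n_in)), np.zeros(n_out)

def forward(x, layers):
    for i, (W, b) in enumerate(layers, start=1):
        # Every neuron in the layer does multiply, add, activate at once.
        x = np.maximum(W @ x + b, 0.0)
        print(f"after layer {i}: {x.shape[0]} values")
    return x

# 8 input numbers -> 16 neurons -> 16 neurons -> 4 neurons
layers = [make_layer(8, 16), make_layer(16, 16), make_layer(16, 4)]
x = rng.normal(size=8)  # stand-in for one token's vector
out = forward(x, layers)
```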

With such mathematical simplicity, you might wonder why we call these functions "neurons" at all—whether it's clever marketing or a genuine connection to brain cells. The biological inspiration exists but has been simplified dramatically. Real neurons feature complex tree-like structures with thousands of connections and intricate biochemical processes [5]. They resemble bustling cities compared to the light switches of their artificial counterparts. The mathematical version retains only the bare pattern: receive input, process, produce output.

Now we arrive at the most intriguing puzzle of all—the dance between mathematical simplicity and linguistic complexity. Consider the vast spectrum of human expression: poetry that makes us weep, stories that transport us across worlds, conversations that spark revolutions... all somehow captured in these humble calculations?

Words become numbers, numbers flow through these neural gateways, and meaning somehow emerges on the other side. It's like watching water transform into wine, except the transformation happens across thousands of mathematical operations instead of a miracle. Those vector representations we explored earlier travel through layers of neural networks where, despite their mathematical simplicity, these systems identify remarkably complex patterns, including the subtle cadences of human language [6].

Some neurons appear to develop their own peculiar sensitivity: one lighting up for emotional undertones, another activating for questions, yet another for negations or contradictions. In practice, though, many neurons respond to a tangle of unrelated features. As signals cascade through the network, raw numeric inputs evolve into increasingly abstract representations. The mathematics remains simple at each individual step, but the cumulative effect creates something that appears to understand.

The limitations are significant. These systems don't truly "understand" language as humans do—they detect statistical patterns without physical or social context. They function as pattern-matching engines, not conscious entities with comprehension.

Yet there's a mesmerizing quality to how these basic operations—multiply, add, threshold—collectively generate seemingly intelligent behavior, making me wonder how much of our own intelligence might emerge from simple processes operating at vast scale with intricate organization.

My Take

So that's the mystery solved! Those artificial neurons are the tiny mathematical workers that implement all those tensor operations we've been talking about. They're absurdly simple individually—just multiplication, addition, and a threshold function—yet collectively they're what makes all the AI magic happen.

It's striking how such elementary components create such complex results when networked together. Like how atoms form everything from water to stars, these basic mathematical switches form the intelligence that can generate human-like text. The power isn't in the individual neurons but in their connections and patterns.

What stays with me is the stark contrast between how AI and humans process language. We learn through physical experience and social interaction; these systems learn through pure mathematical pattern recognition. They're taking a completely different path to a similar destination.

Now when I think about transformers and tensors, I can actually visualize what's happening inside—billions of these tiny switches flipping on and off, collectively implementing those complex transformations. Finally seeing the bricks that make up the building I've been studying from the outside.

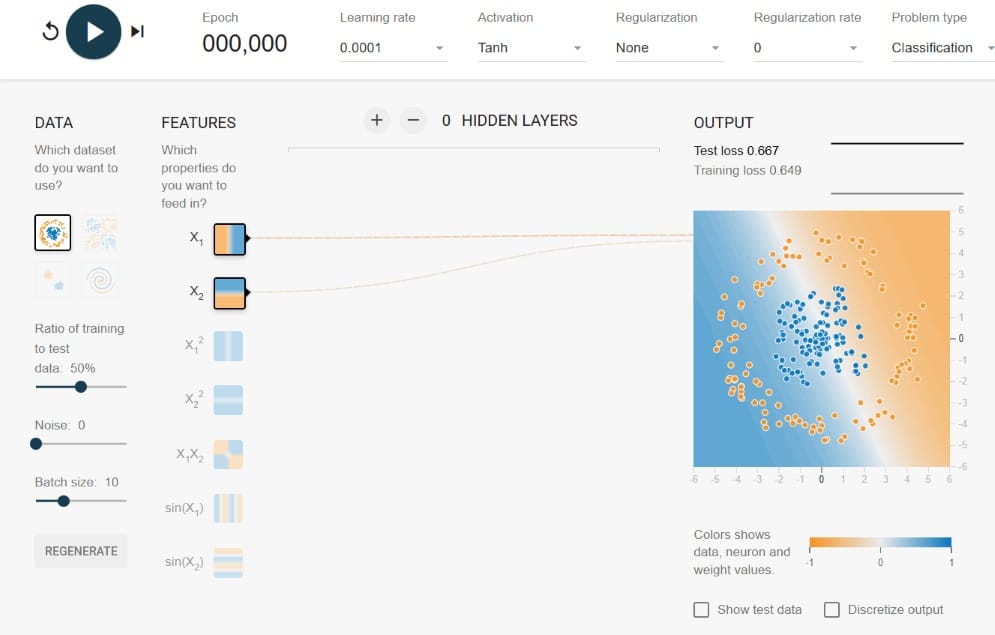

Resource of the Day: TensorFlow Neural Network Playground

Want to watch an artificial neuron learn in real-time? The TensorFlow Neural Network Playground lets you see these mathematical workers in action, right in your browser.

Here's how to set it up to actually understand what's happening:

- Remove all the hidden layers with the minus (−) button next to "Hidden layers" above the network (we want just one output neuron)

- Reduce features to just "X₁" and "X₂" (the simplest inputs)

- Important: Change the learning rate to 0.0001 (this slows everything down so you can see changes)

- Click the Play (▶) button to start training

What you're actually seeing:

- The orange and blue dots are data points we're trying to classify (think: cats vs dogs)

- The lines connecting inputs to the neuron show weights: thickness shows strength (thin = weak influence, thick = strong influence), and color shows sign (blue = positive, orange = negative)

- The background color shows the neuron's decision boundary (where it "thinks" orange changes to blue)

- As training progresses, the neuron adjusts these weights (the lines change thickness and color)

This is our "multiply, add, threshold" function trying different weight values! It's literally changing the equation: (w₁×X₁) + (w₂×X₂) + bias, then applying an activation function to get its output.
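To get a feel for what "adjusting weights" means, here is a minimal training loop for one neuron in plain Python. It is not the playground's code. It assumes a sigmoid activation, a log-loss error measure, and a handful of made-up points that a straight line can separate. Each pass nudges w1, w2, and the bias in the direction that reduces the error.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Made-up, linearly separable points: label 1 roughly when x1 + x2 > 0.
data = [((2.0, 1.0), 1), ((1.0, 2.5), 1), ((0.5, 0.8), 1),
        ((-1.5, -0.5), 0), ((-2.0, 1.0), 0), ((0.3, -1.9), 0)]

w1, w2, bias = 0.0, 0.0, 0.0
learning_rate = 0.1

for epoch in range(200):
    for (x1, x2), y in data:
        out = sigmoid(w1 * x1 + w2 * x2 + bias)  # multiply, add, activate
        err = out - y  # gradient of log-loss with respect to the weighted sum
        w1 -= learning_rate * err * x1
        w2 -= learning_rate * err * x2
        bias -= learning_rate * err

correct = sum((sigmoid(w1 * x1 + w2 * x2 + bias) > 0.5) == (y == 1)
              for (x1, x2), y in data)
print(f"w1={w1:.2f}  w2={w2:.2f}  bias={bias:.2f}  correct={correct}/{len(data)}")
```

The learned boundary is the straight line where w1×x1 + w2×x2 + bias = 0, which is exactly what the playground's background color is drawing.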

Try the circle dataset first. Watch how a single neuron can only create a straight-line boundary (it can't curve), which means it can't perfectly classify a circle. Then try adding one hidden layer with 3-4 neurons—suddenly it works! This shows why we need multiple neurons working together for complex problems.
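The circle experiment can also be sketched offline. In this hypothetical setup, inner points sit within radius 0.9 of the center and outer points sit on a ring at radius 2 to 2.5. A hidden layer of four hand-wired ReLU neurons (weights chosen by hand, not learned) draws a square around the center, which is enough to separate the two groups perfectly. For comparison, a brute-force search over many straight lines stands in for a single neuron. It never gets every point right, because no straight line can separate a disc from the ring around it.

```python
import math

def relu(z):
    return max(0.0, z)

def ring_points(count, radii, label):
    # Evenly spaced angles; the radius cycles through the given values.
    pts = []
    for i in range(count):
        angle = 2 * math.pi * i / count
        r = radii[i % len(radii)]
        pts.append((r * math.cos(angle), r * math.sin(angle), label))
    return pts

# Made-up "circle" data: label 0 near the center, label 1 on the surrounding ring.
data = ring_points(20, [0.3, 0.6, 0.9], 0) + ring_points(40, [2.0, 2.25, 2.5], 1)

def hidden_layer_net(x1, x2, half_width=1.2):
    # Four hidden neurons, each watching one side of a square around the center.
    hidden = [relu(x1 - half_width), relu(-x1 - half_width),
              relu(x2 - half_width), relu(-x2 - half_width)]
    # Output neuron: any active hidden neuron means "outside".
    return 1 if sum(hidden) > 0 else 0

def accuracy(predict):
    return sum(predict(x1, x2) == label for x1, x2, label in data) / len(data)

def best_single_line():
    # A single neuron's boundary is a line w1*x1 + w2*x2 + b = 0; try many of them.
    best = 0.0
    for deg in range(0, 360, 5):
        w1, w2 = math.cos(math.radians(deg)), math.sin(math.radians(deg))
        for step in range(-30, 31):
            b = step / 10
            acc = accuracy(lambda x1, x2: 1 if w1 * x1 + w2 * x2 + b > 0 else 0)
            best = max(best, acc)
    return best

print("hidden layer of 4 neurons:", accuracy(hidden_layer_net))
print("best single straight line:", round(best_single_line(), 3))
```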

This visualization makes concrete what we've been discussing: artificial neurons are simple mathematical functions that gain power through connections and quantity, not individual complexity.

⚙️ Behind the Cognitive Curtain

Here's a glimpse into our conversation while exploring artificial neurons.

Claude: I realized during our discussion that I hadn't clearly connected how neurons relate to the tensors we talked about last week. What confused you?

Matt: "I thought we basically had vectors that turned into tensors and we did some heavy cross math until it all made sense... where does this artificial neuron play a role just for my understanding?"

Claude: Great question! These artificial neurons are actually the workers performing those tensor operations we discussed previously.

Matt: "So what we're talking about here is essentially the math that connects the layers of each tensor, the actual math that is done between the layers of the tensor?"

Claude: Exactly! These simple mathematical functions are what implement all those tensor operations.

Matt: "I've got this 20 dimensional tensor... each dimension is layers of matrices right? So what is actually going on - it's these little workers doing the math?"

Claude: Yes! Picture a tensor like an apartment building - these neurons are the elevators and stairs connecting different floors, moving information between layers.

Matt: "So when we spent all that time last week talking about tensors doing math between their layers, these functions are that math between layers."

Claude: That's it! That's the connection I hadn't made clear enough initially. The neurons aren't separate from tensor operations - they ARE the operations.

Matt: "It seems so basic. It's just a specific equation repeated over and over again. But it's that repetition, like the many cells in our brain, that makes all the difference. So I guess now I need to start asking about how the sum becomes greater than its parts..."

Claude: That's such a perfect way to put it. How something as simple as "multiply, add, threshold" repeated billions of times can create systems capable of understanding language is the real magic here!

References

1. Kriesel, D. (2007). A Brief Introduction to Neural Networks. p. 14.

2. Jain, A. K., Mao, J., & Mohiuddin, K. M. (1996). Artificial neural networks: A tutorial. Computer, 29(3), 31-44.

3. Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. Section 6.3.

4. Cybenko, G. (1989). Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals and Systems, 2(4), 303-314.

5. Arbib, M. A. (2003). The Handbook of Brain Theory and Neural Networks. MIT Press. ISBN: 9780262011976.

6. LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.