Mining for Meaning: How Neural Networks Extract Gold from Data

What if the magic of "AI comprehension" is just an elaborate filtration system—sluicing away numerical mud until only golden patterns remain? The line between mathematical operations and meaning might be nothing more than sophisticated panning techniques.

Today's Focus

Over the past few weeks, we've been exploring the inner workings of AI systems, diving into tensors, understanding how artificial neurons function, and examining other fundamental components. But something was still bothering me: how do all these pieces actually work together to extract meaning from raw data?

I was staring at my phone the other day, watching it recognize my dog despite a blurry image in terrible lighting. A curious paradox. I understand that tensors handle multi-dimensional data and artificial neurons perform calculations, but what happens in that liminal space between mathematical operations and recognition?

What transformations occur as information flows through these neural network layers, and how does meaning emerge from mathematics? The gap between tensor operations and "that's definitely Rufus" contains something essential about how machines develop understanding.

Learning Journey

Neural networks refine information progressively, transforming low-level input features into increasingly abstract representations as data flows through the layers [1]. Each layer acts like a specialized mining sieve, filtering and refining the raw material until something we would recognize as meaning remains.

The work by Zeiler and Fergus back in 2013 was an absolute revelation. They developed visualization techniques that let us actually SEE what different layers detect! Early convolutional neural network layers catch basic edges and textures, middle layers identify complex patterns and shapes, while deeper layers recognize entire objects and concepts [2].
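To get a feel for what "catching basic edges" means, here is a minimal sketch, assuming a hand-written Sobel-style kernel and a toy 6x6 image (both are my own illustrations, not taken from Zeiler and Fergus). A real CNN learns its filters from data; this only shows how a small filter slid across an image can respond to an edge.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the response map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Elementwise product of the patch and the kernel, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image (assumption): dark left half (0), bright right half (1).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked kernel that responds to vertical edges (assumption, not a learned filter).
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

response = conv2d_valid(image, sobel_x)
print(response)
```

The response is zero over the flat regions and positive only where the window straddles the dark-to-bright boundary, which is the sense in which an early filter "detects" an edge.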

This layered processing idea isn't even new! Fukushima's Neocognitron, introduced in 1980, was already mimicking the hierarchical structure of the mammalian visual cortex to extract progressively more complex features from visual input [3].

How do researchers actually visualize what's happening inside these networks? They've developed ingenious techniques that project neural activations back into forms we can interpret. Zeiler and Fergus pioneered methods using deconvolutional networks to project feature activations back to the input space, showing what patterns activate specific neurons [4]. Newer approaches like Class Activation Maps show which image parts a network focuses on when making predictions [5].
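The article doesn't spell out how a Class Activation Map is computed, so treat the following as a rough sketch under stated assumptions. In the common formulation as I understand it, the network ends with global average pooling followed by a linear classifier, and the map for a class is the weighted sum of the final feature maps using that class's weights. The feature maps and weights below are random stand-ins, and `class_activation_map` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the last conv layer's output: K feature maps of size H x W (assumption).
K, H, W, num_classes = 8, 7, 7, 3
feature_maps = rng.random((K, H, W))

# Stand-in for classifier weights applied after global average pooling (assumption).
class_weights = rng.normal(size=(num_classes, K))

def class_activation_map(feature_maps, class_weights, class_idx):
    """Weighted sum of the feature maps using one class's weights, rescaled to [0, 1]."""
    cam = np.tensordot(class_weights[class_idx], feature_maps, axes=1)  # shape (H, W)
    cam = cam - cam.min()
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam

cam = class_activation_map(feature_maps, class_weights, class_idx=1)
print(cam.shape)
```

In practice the map would be upsampled to the input resolution and overlaid on the image; that step is left out here.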

These visualization tools revealed something surprising—neural networks sometimes rely on completely unexpected shortcuts when making decisions! Some neurons activate strongly for seemingly unrelated visual patterns that happen to correlate with certain classes in the training data [6].

The parallels between these artificial networks and our own brains are mind-blowing. Research shows deep neural networks currently provide the best quantitative models of response patterns throughout the primate ventral visual stream, the pathway in the brain most closely associated with object recognition [7]. The hierarchical processing in neural networks loosely mirrors the biological visual processing that happens between our retinas and higher brain regions!

The most fascinating aspect might be what researchers call "emergent properties": capabilities that weren't explicitly programmed but arise from the layered architecture. As information flows through successive layers, the network develops representations of concepts it was never explicitly taught [9]. A study comparing an early CNN architecture (LeNet) with a more modern one (GoogLeNet) found that the deeper, more sophisticated network was better at recognizing abstract relationships between objects, such as telling whether two objects are the same or different sizes [10].

This emergence happens because each layer transforms the representation from the previous layer, creating increasingly abstract and task-relevant features [11]. Networks also require adequate breadth (layer width) to achieve robust abstraction capabilities [12].
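The "each layer transforms the previous layer's output" idea can be sketched in a few lines. This is a minimal illustration with random, untrained weights, so the outputs carry no meaning; the widths, the ReLU choice, and the helper names `make_layer` and `forward` are my assumptions, not something the cited sources specify.

```python
import numpy as np

rng = np.random.default_rng(42)

def make_layer(in_dim, out_dim):
    """Return a layer function: affine transform followed by ReLU."""
    W = rng.normal(scale=0.5, size=(in_dim, out_dim))
    b = np.zeros(out_dim)

    def layer(x):
        return np.maximum(0.0, x @ W + b)

    return layer

# Arbitrary widths: 4 input features, two hidden layers of width 8, output of width 3.
widths = [4, 8, 8, 3]
layers = [make_layer(i, o) for i, o in zip(widths[:-1], widths[1:])]

def forward(x, layers):
    """Feed x through each layer in turn, keeping every intermediate representation."""
    activations = [x]
    for layer in layers:
        activations.append(layer(activations[-1]))
    return activations

x = rng.normal(size=(1, 4))
activations = forward(x, layers)
for depth, a in enumerate(activations):
    print(depth, a.shape)
```

Depth is the length of the `layers` list and breadth is each entry in `widths`; changing either changes how much room the network has to build intermediate representations.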

After researching all this, I needed a simpler way to visualize this process. That's when the gold mining metaphor hit me:

The Gold-Sifting Neural Network: How Layers Extract Meaning

Imagine a neural network as an old-fashioned gold mining operation. Raw language enters as muddy river water with gold flecks hidden inside. As this slurry passes through each specialized sifting layer, different patterns emerge until finally, pure gold (meaning) shines at the bottom.

Let's watch our sentence "The Cat Chases Mice" flow through this gold-mining operation. The numbers below are invented for illustration; they don't come from a real model:

📋 Layer 0: Raw Material Collection

"The" → [2, 0, 1, -1]

"Cat" → [5, 7, 2, 4]

"Chases" → [4, 8, 3, 5]

"Mice" → [3, 6, 2, 3]

🔍 Layer 1: The Coarse Gravel Sifter

INPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│ [2, 0, 1,-1]│ [5, 7, 2, 4]│ [4, 8, 3, 5]│ [3, 6, 2, 3]│
└─────────────┴─────────────┴─────────────┴─────────────┘

       ▼             ▼             ▼             ▼

OUTPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│ [1, 0, 0,-2]│ [6, 8, 3, 3]│ [5, 9, 4, 6]│ [4, 7, 3, 4]│
└─────────────┴─────────────┴─────────────┴─────────────┘

Each word's representation now contains traces of its neighbors, like minerals beginning to separate.
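Here is one way "traces of its neighbors" could come about, as a minimal sketch. It stacks the Layer 0 vectors into a single tensor and blends each word with its adjacent words. The fixed 50/50 blend and the helper name `mix_with_neighbors` are my assumptions; a real network would learn its mixing weights, and this sketch does not reproduce the Layer 1 numbers in the diagram, which appear to be hand-picked for illustration.

```python
import numpy as np

# Layer 0 vectors copied from the walkthrough above.
embeddings = {
    "The":    [2, 0, 1, -1],
    "Cat":    [5, 7, 2, 4],
    "Chases": [4, 8, 3, 5],
    "Mice":   [3, 6, 2, 3],
}
tokens = ["The", "Cat", "Chases", "Mice"]

# Stack into one tensor: rows are words, columns are features.
sentence = np.array([embeddings[t] for t in tokens], dtype=float)

def mix_with_neighbors(x, self_weight=0.5):
    """Blend each row with the mean of its adjacent rows (fixed weights, not learned)."""
    n = x.shape[0]
    out = np.empty_like(x)
    for i in range(n):
        neighbors = [x[j] for j in (i - 1, i + 1) if 0 <= j < n]
        out[i] = self_weight * x[i] + (1 - self_weight) * np.mean(neighbors, axis=0)
    return out

mixed = mix_with_neighbors(sentence)
print(sentence.shape)
print(mixed)
```

After one pass, the row for "The" has moved toward "Cat", and "Cat" sits between its old value and the average of "The" and "Chases".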

🔎 Layer 2: The Pebble Separator

INPUT: Previous output

OUTPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│ [0,-1,-2,-3]│ [7, 9, 5, 4]│ [6,10, 6, 7]│ [5, 8, 5, 5]│
└─────────────┴─────────────┴─────────────┴─────────────┘

Notice the alignment forming between "Cat" [7,9,5,4] and "Chases" [6,10,6,7]? Subject-verb relationships emerging from numerical patterns.
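One common way to put a number on "alignment" between two vectors is cosine similarity. The sketch below applies it to the Layer 2 values; choosing cosine similarity is my assumption, since the walkthrough doesn't name a measure.

```python
import numpy as np

# Layer 2 output vectors copied from the diagram above.
layer2 = {
    "The":    np.array([0, -1, -2, -3], dtype=float),
    "Cat":    np.array([7, 9, 5, 4], dtype=float),
    "Chases": np.array([6, 10, 6, 7], dtype=float),
    "Mice":   np.array([5, 8, 5, 5], dtype=float),
}

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 means same direction, -1 opposite."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

cat_chases = cosine_similarity(layer2["Cat"], layer2["Chases"])
the_cat = cosine_similarity(layer2["The"], layer2["Cat"])
print(f"Cat vs Chases: {cat_chases:.3f}")
print(f"The vs Cat:    {the_cat:.3f}")
```

With these toy numbers, "Cat" and "Chases" point in nearly the same direction while "The" points away. All three content words come out close to one another, though, so the score shows that they cluster together rather than picking out a subject-verb link specifically.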

💎 Layer 3: The Fine Mesh Sieve

INPUT: Previous output

OUTPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│[-1,-2,-3,-4]│ [9,10, 6, 5]│ [8,11, 7, 8]│ [7,10, 6, 6]│
└─────────────┴─────────────┴─────────────┴─────────────┘

"The" drifting toward negative values while content words gain definition, linguistic signal separating from noise.

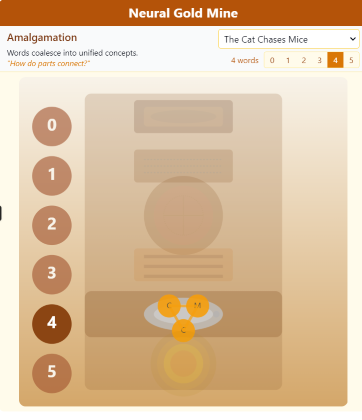

✨ Layer 4: The Mercury Amalgamation

INPUT: Previous output

OUTPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│[0, 0, 0, 0] │[11,12, 8, 7]│[10,13, 9,10]│ [9,12, 8, 8]│
└─────────────┴─────────────┴─────────────┴─────────────┘

The article "the" has been completely zeroed out [0,0,0,0], deemed irrelevant to core meaning, like a miner discarding worthless sand.
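One mechanism that can zero out a representation like this is the ReLU activation, which replaces every negative value with zero. The sketch below applies it to the Layer 3 output. Using ReLU here is my assumption; the walkthrough doesn't say which operation each layer performs, and ReLU alone does not account for the content words also shifting upward between Layers 3 and 4.

```python
import numpy as np

# Layer 3 output copied from the diagram above (rows: The, Cat, Chases, Mice).
layer3 = np.array([
    [-1, -2, -3, -4],
    [9, 10, 6, 5],
    [8, 11, 7, 8],
    [7, 10, 6, 6],
])

def relu(x):
    """Rectified linear unit: keep positive values, clamp negatives to zero."""
    return np.maximum(x, 0)

gated = relu(layer3)
print(gated)
```

Because every entry for "The" was already negative, the whole row becomes zeros, while the all-positive rows for the content words pass through unchanged.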

🏆 Layer 5: The Final Refining

INPUT: Previous output

OUTPUT TENSOR:

┌─────────────┬─────────────┬─────────────┬─────────────┐
│    "The"    │    "Cat"    │  "Chases"   │   "Mice"    │
│[0, 0, 0, 0] │[13,14,10, 9]│[12,15,11,12]│[11,14,10,10]│
└─────────────┴─────────────┴─────────────┴─────────────┘

Pure semantic gold. The numerical relationships between "Cat," "Chases," and "Mice" now encode a complete predator-prey relationship, meaning distilled from mere numbers through layers of transformation.

My Take

There's something poetic about how complex understanding emerges from layers of simple mathematical operations, like how a gold nugget reveals itself from ordinary river mud after passing through a sequence of fine filters. Not magic, but transformation through process.

Nobody explicitly programs these networks to recognize cats or understand sentences about chasing mice. The understanding simply emerges. Through exposure to millions of examples and the layered architecture itself, meaning gradually crystallizes from raw data. Perhaps complexity itself is often just simplicity, recursively applied.

One distinction that finally resolved itself for me was between tensors and layers. For the longest time, these concepts blurred together in my understanding. Now I see that tensors are the multi-dimensional information itself (the "river slurry" carrying gold flecks and other materials), while the layers are the transformation machinery (the sifting stages). The tensors travel through the network, maintaining rich multi-dimensional information about the original input, while each layer poses different questions about patterns and relationships. A subtle distinction, but clarifying.

The next time my phone recognizes my dog in a poorly lit photo, I'll see beyond the surface to appreciate the cascade of simple transformations occurring beneath, each layer building on the previous to transmute pixels into recognition. Layer by layer, the gold of meaning emerges from numerical mud.

Resource of the Day

Imagine a gold mining operation where data flows like river water through increasingly sophisticated mining equipment. Each station (coarse gravel sifters, fine mesh sieves, mercury amalgamation pools) represents a neural network layer, progressively extracting semantic gold from the muddy numerical slurry. This interactive visualization lets you watch the relationships between tensor values shift gradually as they move through the layers.

Play with "The Neural Gold Mine" to experience firsthand how meaning gets extracted, layer by layer, from raw data—making the mathematical concepts we've explored tangible and visual.

⚙️ Behind the Cognitive Curtain

Here's a glimpse into our conversation while creating this exploration on neural network layers.

Claude: I'm curious about what surprised you most in our exploration of neural network layers?

Matt: "Okay so we talked about Tensors in previous conversations, like multi-dimensional math... is this 'layer' thing now just another word for the tensor? or is it layers of tensors? what is the relationship.... and then I think we need that 'pyramid' of understanding (or maybe its more like gold-sifting through 'smart' layers of rock-sifters?)"

Claude: That's exactly what confused me at first too! The gold-sifting metaphor you suggested turned out to be perfect for explaining this concept. It helped us distinguish between the tensors (the material flowing through) and the layers (the machinery doing the processing).

Matt: "Back up - so that tensor data going into the layer - I thought it was multi-pronged multi-dimensional data with lots of perspective on things and it already had some of this context and relationship built into its math - how is this different than the layer-sorting-looking-stuff?"

Claude: That question really pushed us to clarify the distinction. You were right to press on this point because it's fundamental to understanding how neural networks function. The tensors carry the multi-dimensional data while the layers transform it - two completely different concepts that work together.

Matt: "Got it, so the tensors travel with the data through the layers since the multi-dimensional information about the original text that gives it all of its mathematical properties for understanding.. remember there is layers transform that data based on different 'questions' about types of relationships etc"

Claude: Yes! That was the moment everything clicked together. Your insight about layers asking different "questions" about relationships captured the essence perfectly. Each layer is essentially asking a different question about the data flowing through it - from simple adjacency in early layers to complex semantic relationships in deeper ones.

References

1. Zeiler, M. D., & Fergus, R. (2013). "Visualizing and Understanding Convolutional Networks." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

2. Zeiler, M. D., & Fergus, R. (2013). "Visualizing and Understanding Convolutional Networks." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

3. Fukushima, K. (1980). "The Neocognitron." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

4. Zeiler, M. D., & Fergus, R. (2013). "Visualization techniques using deconvolutional networks." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

5. "Class Activation Maps (CAMs)." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

6. "Unexpected patterns discovered through visualization." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

7. "Deep neural networks as models of response patterns in the primate ventral visual stream." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

9. "Emergent intelligence in neural networks." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

10. "Study comparing LeNet to GoogLeNet on recognizing abstract relationships." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

11. "Each layer transforms the representation from the previous layer." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."

12. "Networks require adequate breadth to achieve robust abstraction capabilities." As cited in "Peering into the Neural Mind: Understanding Layered Abstraction and Emergent Intelligence in Deep Neural Networks."