Data Stacking Magic: How Tensors Help AI See What We Can't

We can picture a list of numbers or even a table of data. But what happens when information needs 5, 10, or 100 "directions" at once? Tensors let AI stack data in ways our brains simply can't visualize. Is this why AI sometimes makes connections that completely surprise us humans?

Today's Focus

"Captain, the lateral tensors are failing and the Klingons are closing in!"

That was literally my first thought when I heard people talking about "tensors" in machine learning. We're using Star Trek technobabble in real computer science now? But then I kept seeing the term everywhere. TensorFlow. PyTorch tensors. Tensor Processing Units.

What ARE these things? Everyone explains them differently! Some data scientists just shrug and say "oh, they're multi-dimensional arrays" like that clarifies anything. Others start drawing weird diagrams with too many arrows. My mathematician friend went off about "multilinear algebra" and "contravariant transformations" until my eyes glazed over.

Why are tensors so special that Google named their entire AI framework after them? What can they do that regular old vectors and matrices can't?

Learning Journey

Tensors are essentially containers for data that can have ANY number of dimensions, and this lets them capture relationships that simpler structures just can't handle [1].

Think about how we organize numbers:

- A single number like 42? That's a scalar, or 0D tensor. Just a point.

- A list of numbers like [1,2,3,4,5]? That's a vector, or 1D tensor. A line of values.

- A grid of numbers like a spreadsheet? That's a matrix, or 2D tensor. A table.

But what if we need more dimensions? What if we're working with color images? Each image is already height × width × RGB channels. That's THREE dimensions! And if you're processing a batch of images? Now you're at FOUR dimensions (batch × height × width × channels)! And video? FIVE dimensions (batch × frames × height × width × channels)! [12]
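To make those shapes concrete, here is a minimal sketch using NumPy. NumPy is my choice for illustration, and the image and video sizes are invented for the example rather than taken from any real dataset.

```python
import numpy as np

scalar = np.array(42)               # 0D tensor: a single value
vector = np.array([1, 2, 3, 4, 5])  # 1D tensor: a list of values
matrix = np.zeros((3, 8))           # 2D tensor: a table with 3 rows and 8 columns

# The sizes below are illustrative assumptions.
image = np.zeros((32, 32, 3))         # height x width x RGB channels
batch = np.zeros((8, 32, 32, 3))      # batch x height x width x channels
video = np.zeros((8, 10, 32, 32, 3))  # batch x frames x height x width x channels

tensors = {
    "scalar": scalar,
    "vector": vector,
    "matrix": matrix,
    "image": image,
    "batch": batch,
    "video": video,
}

# ndim is the number of dimensions; shape lists the size along each one.
for name, t in tensors.items():
    print(f"{name:>6}: {t.ndim}D, shape {t.shape}")
```

Each extra dimension is just one more number in `shape`. The computer doesn't care that we can't picture the last two.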

Visualizing this helps:

TENSOR DIMENSIONS BASIC VISUAL:

0D: Scalar [•]

42 (a single value)

1D: Vector [→]

[1, 2, 3, 4, 5, 6, 7, 8] (a list of values)

2D: Matrix [↓→]

[1, 2, 3, 4, 5, 6, 7, 8]

[9, 10, 11, 12, 13, 14, 15, 16]

[17, 18, 19, 20, 21, 22, 23, 24] (a table of values)

3D: 3-Tensor [↓→↘]

Layer 1: Layer 2:

[1, 2, 3, 4] [25, 26, 27, 28]

[5, 6, 7, 8] [29, 30, 31, 32]

[9, 10, 11, 12] [33, 34, 35, 36]

[13, 14, 15, 16] [37, 38, 39, 40]

[17, 18, 19, 20] [41, 42, 43, 44]

[21, 22, 23, 24] [45, 46, 47, 48]

(... and so on for more layers ...) (a cube of values)
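The picture above can be rebuilt in a few lines. This is a small sketch assuming NumPy; the variable names are mine, and the cube stops at the two layers shown.

```python
import numpy as np

vector = np.arange(1, 9)                  # 1D: the values 1 through 8
matrix = np.arange(1, 25).reshape(3, 8)   # 2D: 3 rows x 8 columns
cube = np.arange(1, 49).reshape(2, 6, 4)  # 3D: 2 layers, each 6 rows x 4 columns

print(cube[0])        # Layer 1: the values 1 through 24
print(cube[1])        # Layer 2: the values 25 through 48
print(cube[1, 0, 0])  # first value of Layer 2
```

Locating one value in the cube takes three indices: layer, row, column.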

My brain starts to break here. I can kinda-sorta visualize 3D tensors as stacked layers of numbers. But 4D? 5D? Beyond that? How do we even think about these?!

Tensors didn't originate in computer science. They came from mathematics and PHYSICS! Mathematicians Gregorio Ricci-Curbastro and Tullio Levi-Civita developed tensor calculus around 1900, and Einstein then used it in his theory of general relativity to describe space-time curvature [3]. (Yes, THAT Einstein! Always showing up when things get multidimensional.)

Physicists and mathematicians have been dealing with this visualization problem for over a century. One tool that grew out of that work is the "Einstein product", an operation that generalizes matrix multiplication to tensors by summing over the indices two tensors share. It lets us work with these structures mathematically even when we can't picture them [3]. It's like Einstein gave us dimensional X-ray glasses to see what our brains physically can't.
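Here is one way to see the "Einstein product" in action. This is a sketch assuming NumPy's `einsum`, with shapes and index letters I picked for the example. The idea is to multiply two tensors and sum over the indices they share, just as matrix multiplication sums over one shared index.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3, 4, 5))  # indices i, j, k, l
B = rng.standard_normal((4, 5, 6))     # indices k, l, m

# Sum over the shared indices k and l; i, j and m survive in the result.
C = np.einsum("ijkl,klm->ijm", A, B)

# The same contraction written with tensordot.
C_tensordot = np.tensordot(A, B, axes=([2, 3], [0, 1]))

# The same contraction as an ordinary matrix product on flattened tensors.
C_matmul = (A.reshape(6, 20) @ B.reshape(20, 6)).reshape(2, 3, 6)

print(C.shape)
print(np.allclose(C, C_tensordot), np.allclose(C, C_matmul))
```

The last variant is the reassuring one for me: underneath, this is still matrix multiplication, with some indices grouped together.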

As you add dimensions, the number of values you need to store grows EXPONENTIALLY: a tensor with 10 entries along each of d dimensions holds 10^d values. This is one face of what mathematicians call the "curse of dimensionality." But tensors can often sidestep this curse through something called "low rank decomposition" [2]!

Think of it like data compression, but on steroids. Instead of storing every single value in that massive hypercube of numbers, a tensor with enough internal structure can be decomposed into smaller pieces that capture the essential patterns without the full storage requirements. Dimensional data origami! Fold it just right, and you can unfold the whole structure when needed.
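A rough sketch of the storage argument, assuming NumPy and one particular low-rank format (a sum of a few outer products, often called CP). The sizes and the rank are made up. The code only shows the "unfolding" direction, rebuilding a tensor from its factors; finding good factors for real data is the harder part and isn't shown.

```python
import numpy as np

# Counting values: full storage versus rank-3 factors (illustrative sizes).
n, d, rank = 10, 6, 3
full_entries = n ** d      # every value in a 10 x 10 x 10 x 10 x 10 x 10 hypercube
cp_entries = d * n * rank  # one (n x rank) factor matrix per dimension
print(full_entries, cp_entries)

# A small 3D case: "unfold" a rank-2 tensor from its three factor matrices.
rng = np.random.default_rng(0)
a = rng.standard_normal((4, 2))
b = rng.standard_normal((5, 2))
c = rng.standard_normal((6, 2))

# T[i, j, k] = sum over r of a[i, r] * b[j, r] * c[k, r]
T = np.einsum("ir,jr,kr->ijk", a, b, c)

stored = a.size + b.size + c.size
print(T.shape, T.size, stored)
```

The factors hold far fewer numbers than the tensor they rebuild, and the gap widens quickly as dimensions are added.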

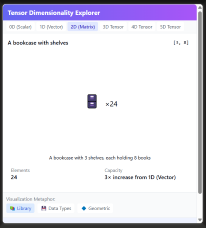

But how do we even think about objects we can't visualize? This is where metaphors become crucial. (See the "Resource of the Day" section for an expanded visual of this concept.)

Imagine a library. A single book is like a scalar (0D). A bookshelf full of books is like a vector (1D). A bookcase with multiple shelves is like a matrix (2D). A floor of the library with multiple bookcases is a 3D tensor. The entire multi-floor library building? That's a 4D tensor. And a network of libraries across a city? Now we're talking 5D and beyond [6].

This metaphor gives me a framework to understand how information gets organized in tensor-space. Each new dimension adds another coordinate we need to specify. Just like I need more information to locate a specific book (building, floor, section, bookcase, shelf, position), tensors use multiple indices to pinpoint values in multi-dimensional space.
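The book-locating idea maps directly onto tensor indexing. A toy sketch, assuming NumPy and invented sizes for each level of the library:

```python
import numpy as np

# Hypothetical library: 2 buildings, 3 floors, 4 sections,
# 5 bookcases per section, 6 shelves per bookcase, 7 positions per shelf.
shape = (2, 3, 4, 5, 6, 7)
library = np.arange(np.prod(shape)).reshape(shape)  # each "book" holds a unique ID

# One index per dimension pinpoints a single book.
address = (1, 2, 0, 4, 3, 5)  # building, floor, section, bookcase, shelf, position
book_id = library[address]

# In memory this is still one long row of books; the indices are a coordinate system on it.
flat_position = np.ravel_multi_index(address, shape)
print(book_id, flat_position)
```

Six coordinates, one value. Nothing about the lookup gets harder as dimensions are added, even though the picture in my head gave up around the third.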

My head is spinning a bit.

Reality is multi-dimensional! When models try to capture relationships between words, a single 2D matrix only goes so far. "The cat sat on the mat" has relationships between subjects and objects and verbs that are more complex than word-to-word connections. A matrix can encode a relationship between two words, but a structure like subject-verb-object involves three things at once, which is naturally a 3D tensor [11].
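A toy version of the subject-verb-object idea, assuming NumPy. The tiny vocabulary and the second fact are invented for the example, and this is a hand-built illustration of relational data, not how a language model stores anything internally.

```python
import numpy as np

subjects = ["cat", "dog"]
verbs = ["sat_on", "chased"]
objects = ["mat", "cat"]

# facts[s, v, o] is 1 when "subject verb object" is a known fact.
facts = np.zeros((len(subjects), len(verbs), len(objects)), dtype=int)

def add_fact(subject, verb, obj):
    facts[subjects.index(subject), verbs.index(verb), objects.index(obj)] = 1

add_fact("cat", "sat_on", "mat")
add_fact("dog", "chased", "cat")

# Collapsing the verb axis leaves a 2D subject-object matrix:
# it still says cat and mat are related, but no longer says how.
pairs = facts.sum(axis=1)

print(facts[subjects.index("cat"), verbs.index("sat_on"), objects.index("mat")])
print(pairs)
```

The third axis is what keeps "sat on" and "chased" apart. Flatten it away and both facts look the same.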

This is one reason researchers keep bringing tensor methods into language modeling. There's even a technique called "tensor train decomposition" that has been proposed as a compact way to model sequences of words [13]. It's like compressing language's multi-dimensional nature without losing the meaning!
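To give a feel for what a "tensor train" is, here is a bare-bones sketch for a 3D tensor, assuming NumPy. It uses the textbook recipe of repeated truncated SVDs and is not the method from [13]. The function name, sizes, and ranks are my own, and the test tensor is built to have a low-rank structure so the compression is exact.

```python
import numpy as np

def tensor_train_3d(tensor, r1, r2):
    """Split a 3D tensor into three small 'train cars' using two truncated SVDs."""
    n1, n2, n3 = tensor.shape

    # First SVD: separate the first index from the other two.
    u, s, vt = np.linalg.svd(tensor.reshape(n1, n2 * n3), full_matrices=False)
    core1 = u[:, :r1]              # shape (n1, r1)
    rest = s[:r1, None] * vt[:r1]  # shape (r1, n2 * n3)

    # Second SVD: separate the second index from the third.
    u, s, vt = np.linalg.svd(rest.reshape(r1 * n2, n3), full_matrices=False)
    core2 = u[:, :r2].reshape(r1, n2, r2)  # shape (r1, n2, r2)
    core3 = s[:r2, None] * vt[:r2]         # shape (r2, n3)
    return core1, core2, core3

# Build a test tensor that is known to have a short train (ranks 2 and 2).
rng = np.random.default_rng(0)
g1 = rng.standard_normal((4, 2))
g2 = rng.standard_normal((2, 5, 2))
g3 = rng.standard_normal((2, 6))
tensor = np.einsum("ia,ajb,bk->ijk", g1, g2, g3)

core1, core2, core3 = tensor_train_3d(tensor, r1=2, r2=2)

# Chain the cores back together to recover the full tensor.
rebuilt = np.einsum("ia,ajb,bk->ijk", core1, core2, core3)
stored = core1.size + core2.size + core3.size

print(tensor.size, stored, np.allclose(tensor, rebuilt))
```

Each core only talks to its neighbors, like cars in a train. With many more dimensions the chain simply gets longer instead of the storage exploding.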

It goes beyond language. Tensor methods are helping reduce neural network size. Modern AI models are ENORMOUS, but tensor decomposition methods can shrink them down, in some reported cases while even IMPROVING performance [14]. How counterintuitive is that? Sometimes less really is more!
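The simplest version of this shrinking trick works on a single weight matrix. Below is a sketch assuming NumPy and a made-up "layer" whose weights are low-rank plus a little noise. Real weights are not guaranteed to compress this well, and nothing here measures model performance.

```python
import numpy as np

rng = np.random.default_rng(0)
rows, cols, rank = 256, 512, 16

# Hypothetical weight matrix: hidden low-rank structure plus small noise.
noise = 0.01 * rng.standard_normal((rows, cols))
weights = rng.standard_normal((rows, rank)) @ rng.standard_normal((rank, cols)) + noise

# Truncated SVD: keep only the strongest `rank` directions.
u, s, vt = np.linalg.svd(weights, full_matrices=False)
left = u[:, :rank] * s[:rank]  # shape (rows, rank)
right = vt[:rank]              # shape (rank, cols)

approx = left @ right
rel_error = np.linalg.norm(weights - approx) / np.linalg.norm(weights)

full_params = weights.size
small_params = left.size + right.size
print(full_params, small_params, rel_error)
```

Two thin matrices replace one fat one, so a layer computing `x @ weights` could compute `(x @ left) @ right` instead. Tensor decompositions extend the same idea to weights with more than two dimensions.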

Tensor networks and neural networks developed separately but are now converging! Researchers discovered they're actually linked through their underlying mathematical structure, creating new hybrid approaches called "Tensorial Neural Networks" [15]. It's like two separate mathematical paths through the wilderness suddenly meeting at the same mountain peak.

That mountain peak started in quantum physics. Tensor networks were originally developed to model quantum systems. Now those same mathematical tools are revolutionizing machine learning. Mathematical concepts developed to understand the smallest building blocks of reality are now helping us create artificial intelligence. If that's not poetic, I don't know what is!

The thing that makes tensors truly special isn't just that they can store more data—it's that they capture relationships and patterns that simpler structures can't see. They're not just bigger containers; they're fundamentally different lenses that reveal connections that would otherwise remain invisible.

My Take

Tensors aren't just some technical detail in machine learning—they're a fundamental shift in how we represent and understand information. They're like multidimensional glasses that let us see the rich, interconnected nature of reality in ways our naturally 3D brains just can't grasp unaided.

What fascinates me most is how tensors bridge the gap between human intuition and computational capability. We're visual creatures evolved to navigate a 3D world, but much of reality's complexity exists beyond those dimensions. Tensors give us mathematical tools to extend our reach into those hidden realms, even when we can't directly visualize them.

It reminds me of how quantum physics describes particles existing in superposition—being in multiple states simultaneously until observed. Our brains struggle with that concept because it defies our everyday experience. Similarly, tensors let computers work with data relationships that exist simultaneously across multiple dimensions, even when our minds can only glimpse shadows of those connections.

Where will tensor methods take us next? Will we see entirely new kinds of AI architectures emerging from tensor network theory? Might these multi-dimensional structures help us unlock aspects of consciousness or reality that have remained hidden? Could tensor thinking help us bridge the gap between classical and quantum computing?

I'm still at the beginning of understanding these multidimensional marvels, but I've gained a new appreciation for their power. Tensors aren't just Star Trek technobabble after all—they're mathematical wormholes connecting us to higher dimensions of meaning our unaided brains could never access.

Resource of the Day

This interactive explorer helps you understand tensor dimensions by letting you see and play with shapes across different dimensions—from simple points to mind-bending 4D and 5D objects—all while switching between everyday metaphors like libraries and data that make these math concepts feel real. Click through the dimensions to experience that lightbulb moment when higher-dimensional thinking suddenly makes sense, and discover why modern AI needs these complex structures to understand our world.

⚙️ Behind the Cognitive Curtain

Claude: One of the most interesting moments in our exploration was when we hit that dimensional wall – the point where visualization breaks down. How did you feel when we started talking about 4D and 5D tensors?

Matt: At first it was complex to the point of confusion. After reading it all, I knew I still needed more help, and said: "I still don't really get it, honestly. Maybe I'm reading too much into those vectors – I thought we could 'visualize' those relationships mathematically through their relationship variables. I think we even drew a map of a 3D visualized relationship mapping that showed closeness and distance from those vector numbers as our basis. I think I'm just misunderstanding what a tensor 'is' and 'does.'"

Claude: That struggle is actually really common! I found it fascinating how we had to pivot from direct visualization to metaphors like the library. Did that metaphor help make the leap more intuitive for you?

Matt: The library idea is an OK start to the analogy, but I was trying to understand where vectors and tokens connect to this tensor concept. I wanted to build on the knowledge from the last two weeks directly. So let's add other example layers to help show how it relates to other data types AND visuals.

Claude: That's a great observation about building knowledge incrementally. I noticed we had a breakthrough when we connected tensors to our previous discussions about vectors, showing how they're part of the same hierarchy rather than completely separate concepts. That visual representation was really powerful! What struck me was how seeing tensors in action – processing actual words through different shapes and transformations – made something abstract suddenly tangible. The visualization bridged the gap between mathematical theory and practical application.

Matt: Indeed. With that graphic in place, I felt so much better about the concept and said, "Beautiful... I think I understand better now. This helped me see how tensors aren't just about storing more data but about representing relationships in ways that simpler structures can't."

Claude: Exactly! That was the real "aha moment" – realizing tensors aren't just bigger containers but fundamentally different ways of organizing information that reveal connections otherwise hidden. I'm curious – what aspect of tensors are you most interested in exploring next?

References

1. Vasilescu, M.A.O., Terzopoulos, D. (2003). "A Multilinear (Tensor) Algebraic Framework for Computer Graphics, Computer Vision and Machine Learning", Semantic Scholar. https://www.semanticscholar.org/paper/A-Multilinear-(Tensor)-Algebraic-Framework-for-and-Vasilescu/65bcd8d1f1128422d9dff33a4eea8420a5325052

2. De Lathauwer, L. (2020). "Tensors and multilinear algebra: what and why", AI KU Leuven. https://ai.kuleuven.be/stories/post/2023-01-10-tensorlab/

3. Lakkam, V., Singh, S., Garg, S., Tripathi, T. (2023). "Linear to multi-linear algebra and systems using tensors", Frontiers in Applied Mathematics and Statistics. https://www.frontiersin.org/journals/applied-mathematics-and-statistics/articles/10.3389/fams.2023.1259836/full

4. Sengupta, R., Adhikary, S., Oseledets, I., Biamonte, J. (2022). "Tensor networks in machine learning", European Mathematical Society Magazine. https://euromathsoc.org/magazine/articles/101

5. Liu, X., Parhi, K.K. (2023). "Tensor Decomposition for Model Reduction in Neural Networks: A Review", arXiv. https://arxiv.org/abs/2304.13539

6. Hergl, C., et al. (2021). "Visualization of Tensor Fields in Mechanics", Computer Graphics Forum. https://www.researchgate.net/publication/350507590_Visualization_of_Tensor_Fields_in_Mechanics

7. Bi, C., Yang, L., Duan, Y. et al. (2019). "A survey on visualization of tensor field", Journal of Visualization. https://link.springer.com/article/10.1007/s12650-019-00555-8

8. Chen, X., et al. (2022). "A Novel Metaphor Graph Drawing Method for Multidimensional Data Visualisation and Its Case Study on COVID-19 Vaccination Analysis", PMC. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9741166/

9. Kratz, A., Schöneich, M., Zobel, V., Burgeth, B., Scheuermann, G., Hotz, I., Stommel, M. (2014). "Tensor visualization driven mechanical component design", Proceedings of IEEE Pacific Visualization Symposium, 145-152.

10. Etemadpour, R., et al. (2016). "Choosing visualization techniques for multidimensional data projection tasks: A guideline with examples", Computer Vision, Imaging and Computer Graphics: Theory and Applications. https://www.cs.okstate.edu/~chriscrick/Etemadpour-CCIS-16.pdf

11. Sutskever, I., et al. (2009). "Bayesian Clustered Tensor Factorization to model relational concepts", Machine Learning.

12. Analytics Vidhya (2022). "Data Representation in Neural Networks - Tensor". https://www.analyticsvidhya.com/blog/2022/07/data-representation-in-neural-networks-tensor/

13. AI Models (2023). "Language Modeling Using Tensor Trains", AI Research Paper Details. https://www.aimodels.fyi/papers/arxiv/language-modeling-using-tensor-trains

14. Liu, X., Parhi, K.K. (2023). "Tensor Decomposition for Model Reduction in Neural Networks: A Review", arXiv. https://arxiv.org/abs/2304.13539

15. Zhang, W., et al. (2023). "Tensor Networks Meet Neural Networks: A Survey and Future Perspectives", arXiv. https://arxiv.org/abs/2302.09019